Peering into your heart – with the help of AI

Portable ultrasound devices can bring high-tech imaging to your doctor’s office, to emergency vehicles, and to less-developed countries. A new AI tool helps make these devices easier for a wider range of health professionals to use.

A handheld, portable ultrasound probe that’s the size of a fat window squeegee can peer inside your heart – but this amazing piece of technology still requires highly trained medical personnel to operate it.

Now, researchers have developed a new AI tool that helps medical personnel position the device properly so that they can see if the heart is normal or not.

That could allow medical personnel who aren’t ultrasound experts to use the device in your doctor’s office, in an emergency vehicle, or in countries where skilled ultrasound clinicians are in short supply.

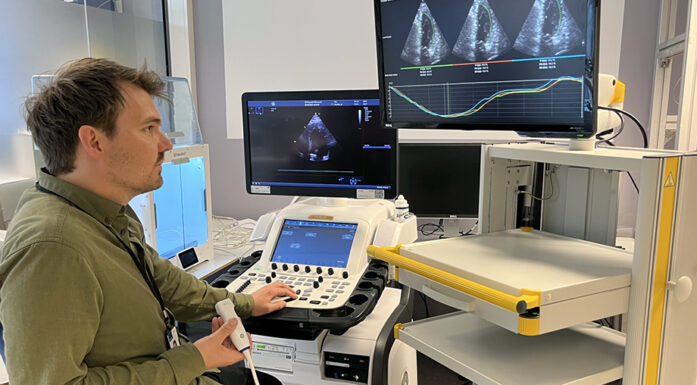

“We’re trying to simplify echocardiography for non-expert clinicians, so we can spread it from the hospital out into the world,” said David Pasdeloup, a researcher at NTNU’s Centre for Innovative Ultrasound Solutions (CIUS) whose PhD research involved developing the tool.

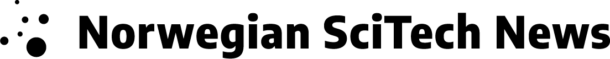

Here’s what the AI tool looks like when in use. The image in the upper right-hand corner shows a cross section of the heart. The red box is where the sonographer should position the probe to get the best image of the heart. Here the AI tool is giving the operator feedback that the probe is tilted. The operator can then shift the probe’s position so that the red box turns green. Screenshot: David Pasdeloup/NTNU

Echocardiography is the use of ultrasound to collect images of the beating heart. It can be used for quick assessments in emergency situations, for more detailed diagnoses, or to monitor the heart’s function over the longer term, for example while a patient is undergoing chemotherapy.

“Chemotherapy can be toxic for the heart,” Pasdeloup said. “So if echocardiography shows that the heart function has worsened since the last visit, doctors may decide to stop that treatment.”


Imaging the beating heart isn’t so easy

To understand what Pasdeloup did, you need to understand why using ultrasound to image the heart is so difficult to begin with.


First of all, the human heart is protected by the ribs and surrounded by the lungs. Both get in the way: they keep ultrasound waves from “propagating,” or travelling freely through the chest, which makes it very difficult to image the heart clearly.

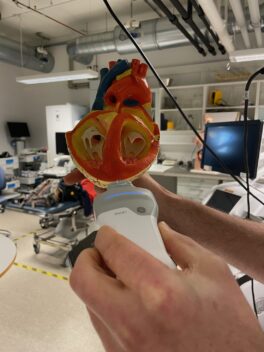

Håvard Dalen holds a plastic model of a heart that has been cut away so the interior chambers are visible. He holds an ultrasound probe against the model to show how it is typically held during use. Photo: Nancy Bazilchuk

“The heart is one of the most difficult organs for ultrasound. You only see a 2-D image on the screen, but the heart is 3-D. So you really need a good mental image of what is inside the body to place the probe properly, and at the same time you have to avoid the ribs. This makes it extremely challenging,” Pasdeloup said.

If the probe is a little too high, a little too low, or angled improperly, the resulting videos and images will be less than optimal. Skilled clinicians overcome this challenge by placing the probe in exactly the right spot to get a good cross section of the beating heart, but that ability takes considerable training to acquire.

But that’s not the only challenge, says Håvard Dalen, a cardiologist at St. Olavs Hospital and professor at CIUS.

“It’s the one organ which is constantly moving,” he pointed out. “Not only the heart itself is moving, but every time you take a breath you change the position a bit.”


Hundreds of ultrasounds to teach the AI

Pasdeloup tackled these challenges by collecting hundreds of cardiac ultrasound recordings of varying quality. He fed these recordings to his AI tool to teach it what correct and incorrect images look like.

Eventually, the AI tool was able to tell whether the image was good or not. If the probe was positioned incorrectly, the AI tool could help the user move the device to improve the image.

This graphic shows the different types of feedback that users can get from the AI tool that Pasdeloup and his colleagues have developed. Source: Eur Heart J – Imaging Methods Practice, Volume 1, Issue 2, September 2023, qyad040, https://doi.org/10.1093/ehjimp/qyad040. Copyright by the Authors 2023. CC-BY-4.0

“Simplified systems like these handheld ultrasound scanners, they have only two-dimensional imaging, so you have to know where in the three-dimensional heart this two-dimensional image originates from. And the AI tool is able to tell you,” Dalen said.

Real-time guidance on a tablet

The AI tool helps operators work out how to hold the probe so that they get an optimal picture of the heart’s important structures.

The tool is a piece of software that can run on something as simple as a tablet. The ultrasound operator can look at a tablet screen while moving the probe on the patient’s body.

The screen shows the beating heart, as imaged by the device, in its centre. Feedback from the AI is displayed in the upper right corner and is updated in real time as the operator moves the probe, so that they can position it to get the correct image.
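The article does not describe how the software decides what to show, so the following is only a minimal sketch under stated assumptions. It assumes a hypothetical model that outputs, for every video frame, a probability for each of a few hypothetical feedback classes (`good`, `tilted`, `move_up`, `move_down`, `rotate`). The scores are smoothed with an exponential moving average so the indicator does not flicker as the probe moves, and the box turns green only when the smoothed `good` score is the highest and passes an assumed threshold.

```python
from dataclasses import dataclass, field

# Hypothetical feedback classes and on-screen messages (assumptions, not
# the real tool's categories).
MESSAGES = {
    "good": "View OK: hold the probe still",
    "tilted": "Probe is tilted: straighten it",
    "move_up": "Move the probe up",
    "move_down": "Move the probe down",
    "rotate": "Rotate the probe",
}
FALLBACK = "Adjust the probe"


@dataclass
class FeedbackSmoother:
    alpha: float = 0.3      # weight of the newest frame (assumed value)
    threshold: float = 0.6  # smoothed "good" score needed for a green box (assumed)
    scores: dict = field(default_factory=dict)

    def update(self, frame_probs: dict) -> tuple:
        """Blend one frame's class probabilities into exponential moving
        averages and return (box_colour, message) for the display."""
        for label, p in frame_probs.items():
            previous = self.scores.get(label, p)  # first sighting starts at p
            self.scores[label] = self.alpha * p + (1 - self.alpha) * previous
        if not self.scores:
            return "red", FALLBACK

        best = max(self.scores, key=self.scores.get)
        if best == "good" and self.scores["good"] >= self.threshold:
            return "green", MESSAGES["good"]

        # Otherwise show the most likely correction, ignoring "good".
        corrections = {k: v for k, v in self.scores.items() if k != "good"}
        if not corrections:
            return "red", FALLBACK
        return "red", MESSAGES.get(max(corrections, key=corrections.get), FALLBACK)


if __name__ == "__main__":
    smoother = FeedbackSmoother()
    # Simulated frames: the probe starts tilted...
    print(smoother.update({"good": 0.1, "tilted": 0.8, "move_up": 0.1}))
    # ('red', 'Probe is tilted: straighten it')
    # ...then the operator corrects it for ten frames.
    for _ in range(10):
        result = smoother.update({"good": 0.9, "tilted": 0.05, "move_up": 0.05})
    print(result)
    # ('green', 'View OK: hold the probe still')
```

The smoothing trades a little responsiveness for a steadier display: with `alpha = 0.3` the box needs a few consistently good frames before it turns green. The hard part of a real system, the trained image model that produces the per-frame probabilities, is not shown here.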

Helps even skilled ultrasound clinicians

The motivation behind Pasdeloup’s research was to make portable ultrasound devices like the handheld scanner widely available.

But in the process of doing his research, he and his colleagues realized it could be equally useful for skilled clinicians, because it helped standardize the images they captured.

“What we found is that the experienced users, like our sonographers, who have conducted thousands of exams, still improve,” Dalen said. “If you think about it, experienced sonographers each have a kind of personal signature when they do their imaging. So for me, when I see a video, I can more or less tell who has actually done the recording.”

Pasdeloup’s tool essentially erased those differences, Dalen said.

“With David’s innovation, I’m not able to say who has done the imaging. And that makes it possible to switch between different operators without seeing operator-related changes in the images,” he said.

This means that when patients come in to check whether a heart problem has changed over time, or whether cancer treatment is affecting their heart, cardiologists like Dalen can more easily detect small but important changes, he said.

“We are looking for quite small details and differences,” he said.
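Why does removing operator-related differences help with spotting small changes? One common way to express this, which is not taken from the article, is the minimal detectable change (MDC). A difference between two exams is only convincing if it is larger than the measurement noise. A standard 95% estimate is MDC = 1.96 × √2 × SEM, where the standard error of measurement (SEM) can be estimated from paired readings of the same patients by two operators as the standard deviation of their differences divided by √2. The readings below are made-up numbers for illustration, not data from the study.

```python
import math
from statistics import stdev


def minimal_detectable_change(readings_a, readings_b, z=1.96):
    """Smallest change between two exams that exceeds measurement noise.

    readings_a and readings_b hold the same measurement (e.g. ejection
    fraction in %) taken on the same patients by two different operators.
    """
    if len(readings_a) != len(readings_b) or len(readings_a) < 2:
        raise ValueError("need at least two paired readings")
    diffs = [a - b for a, b in zip(readings_a, readings_b)]
    sem = stdev(diffs) / math.sqrt(2)  # standard error of measurement
    return z * math.sqrt(2) * sem      # MDC at the 95% level when z = 1.96


# Hypothetical ejection-fraction readings (%) from two operators.
operator_1 = [55, 60, 50, 62]
operator_2 = [53, 61, 47, 62]
print(round(minimal_detectable_change(operator_1, operator_2), 2))  # 3.58
```

With these invented readings, only a change of more than about 3.6 percentage points between visits would stand out from operator noise. If standardized acquisitions shrink the differences between operators, the MDC shrinks too, so smaller real changes in a patient’s heart become detectable.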

Can help cut time for more detailed studies, too

The researchers also looked at how AI could help make measurements from these standardized images of the heart.

Here’s what the display looks like when the ultrasound operator has the probe in the correct position. Photo: Nancy Bazilchuk/NTNU

Two of the most important measurements that cardiologists routinely look at are the heart’s left ventricular volume and ejection fraction.

The left ventricle is the chamber of the heart that pumps blood out to all of the organs in the body. The ejection fraction is the percentage of the blood in the left ventricle that is pushed out with each beat, which shows how efficiently the heart is pumping.
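The arithmetic behind these two numbers is simple. The stroke volume (SV) is the end-diastolic volume (EDV, the ventricle at its fullest) minus the end-systolic volume (ESV, at its emptiest), and the ejection fraction is the stroke volume as a percentage of EDV: EF = (EDV − ESV) / EDV × 100%. A minimal Python sketch, using illustrative volumes rather than patient data:

```python
def stroke_volume(edv_ml: float, esv_ml: float) -> float:
    """Blood pumped out in one beat (mL): end-diastolic minus end-systolic volume."""
    if edv_ml <= 0 or not 0 <= esv_ml <= edv_ml:
        raise ValueError("expected EDV > 0 and 0 <= ESV <= EDV")
    return edv_ml - esv_ml


def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """Share of the end-diastolic volume ejected per beat, in percent."""
    return 100.0 * stroke_volume(edv_ml, esv_ml) / edv_ml


# Illustrative values only: EDV 120 mL, ESV 50 mL.
print(stroke_volume(120, 50))                # 70
print(round(ejection_fraction(120, 50), 1))  # 58.3
```

Because EF is a ratio of two measured volumes, small errors in tracing either volume carry straight into the result, which is one reason consistent images matter.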

Together, these two measurements help cardiologists understand how well the heart is working – or not.

During an ultrasound examination, an operator can measure the left ventricular volume and the ejection fraction manually, but this takes time, and the result depends partly on the operator’s subjective judgement.

So Pasdeloup was part of a team that looked at how AI could automatically calculate these values from the images that were collected during an ultrasound.
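The article does not say how the AI turns images into volumes. A widely used clinical approach, shown here only as an assumption about the kind of calculation involved, is the biplane method of disks (modified Simpson’s rule). The ventricle is traced in two roughly perpendicular apical views (four-chamber and two-chamber) and sliced into n disks along its long axis of length L. Each disk is treated as an ellipse with diameters aᵢ and bᵢ, so V = (π/4) Σ aᵢ bᵢ (L/n). In an automated pipeline a model would supply the diameters; in this sketch they are typed in by hand.

```python
import math


def lv_volume_biplane(diams_4ch_cm, diams_2ch_cm, length_cm):
    """Left-ventricular volume (mL) by the biplane method of disks.

    diams_4ch_cm / diams_2ch_cm: disk diameters measured in the apical
    four-chamber and two-chamber views (same number of disks in each).
    length_cm: long-axis length of the ventricle. 1 cm^3 equals 1 mL.
    """
    if len(diams_4ch_cm) != len(diams_2ch_cm) or not diams_4ch_cm:
        raise ValueError("need the same, non-zero number of disks per view")
    thickness = length_cm / len(diams_4ch_cm)
    # Each disk is an elliptical slab: area pi/4 * a * b, height L/n.
    return sum(math.pi / 4 * a * b * thickness
               for a, b in zip(diams_4ch_cm, diams_2ch_cm))


# Sanity check: 20 equal disks describe a cylinder 4 cm wide and 8 cm long,
# whose exact volume is pi * 2^2 * 8 = 32 * pi, about 100.5 mL.
print(round(lv_volume_biplane([4.0] * 20, [4.0] * 20, 8.0), 1))  # 100.5
```

Running the function once on end-diastolic tracings and once on end-systolic tracings gives the EDV and ESV from which the ejection fraction follows.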

They found that the AI’s measurements were comparable to those of skilled operators, and that it saved an average of 5 minutes per scan. That could cut costs and ease everyday workflows for the skilled operators who perform these tests.

Not quite ready for the doctor’s office – yet

Despite the promising results of his work, Pasdeloup says it will still take time for the innovation to find its way to your doctor’s office.

The Centre for Innovative Ultrasound Solutions is a Centre for Research-based Innovation, financed in part by the Research Council of Norway. The rest of the funding comes from industry partners, such as GE Vingmed Ultrasound, a Norwegian company that makes the handheld device Pasdeloup used to develop his software.

“We really believe in the clinical relevance of our tool and hope our industrial partner will choose to integrate it into their scanners,” said Pasdeloup.

That’s just one step. The other is getting regulatory approval for an AI-related product, he said.

In their first clinical study, the research team tested the AI tool in a hospital setting. “The tool worked on all the patients we included. It was very positive to see that we managed to successfully transfer our research work to the clinical setting,” says Pasdeloup.

That kind of proof is important in getting approval, but the complicating factor is the use of AI itself.

“This is an AI product, and that is very new for regulatory agencies,” he said. “It will take time to get it approved by the Food and Drug Administration in the US or to get a CE mark in the European Union. So there can be a long time from innovation to clinical implementation and commercialization.”

Centre for Innovative Ultrasound Solutions

The Centre for Innovative Ultrasound Solutions for health care, maritime, and oil & gas (CIUS) brings academia and industry together to address needs and challenges across all three sectors.


Contact: Svein-Erik Måsøy, centre director

References:

Pasdeloup, David Francis Pierre; Olaisen, Sindre Hellum; Østvik, Andreas; Sæbø, Sigbjørn; Pettersen, Håkon Neergaard; Holte, Espen; Grenne, Bjørnar; Stølen, Stian Bergseng; Smistad, Erik; Aase, Svein Arne; Dalen, Håvard; Løvstakken, Lasse. Real-Time Echocardiography Guidance for Optimized Apical Standard Views. Ultrasound in Medicine and Biology 2023; Volume 49, Issue 1, pp. 333-346. https://doi.org/10.1016/j.ultrasmedbio.2022.09.006

Pasdeloup, David. Deep Learning in the Echocardiography Workflow: Challenges and Opportunities. NTNU PhD Thesis, Faculty of Medicine and Health Sciences, Department of Circulation and Medical Imaging, 2023. https://ntnuopen.ntnu.no/ntnu-xmlui/handle/11250/3108433

S Olaisen, E Smistad, T Espeland, J Hu, D Pasdeloup, A Ostvik, B Amundsen, S Aakhus, A Rosner, M Stylidis, E Holte, B Grenne, L Lovstakken, H Dalen, Automatic measurements of left ventricular volumes and ejection fraction by artificial intelligence reduces time-consumption and inter-observer variability, European Heart Journal – Cardiovascular Imaging, Volume 24, Issue Supplement_1, June 2023, jead119.431, https://doi.org/10.1093/ehjci/jead119.431

Sigbjorn Sabo, David Pasdeloup, Hakon Neergaard Pettersen, Erik Smistad, Andreas Østvik, Sindre Hellum Olaisen, Stian Bergseng Stølen, Bjørnar Leangen Grenne, Espen Holte, Lasse Lovstakken, Havard Dalen, Real-time guidance by deep learning of experienced operators to improve the standardization of echocardiographic acquisitions, European Heart Journal – Imaging Methods and Practice, Volume 1, Issue 2, September 2023, qyad040, https://doi.org/10.1093/ehjimp/qyad040