Building computers the way our brains work

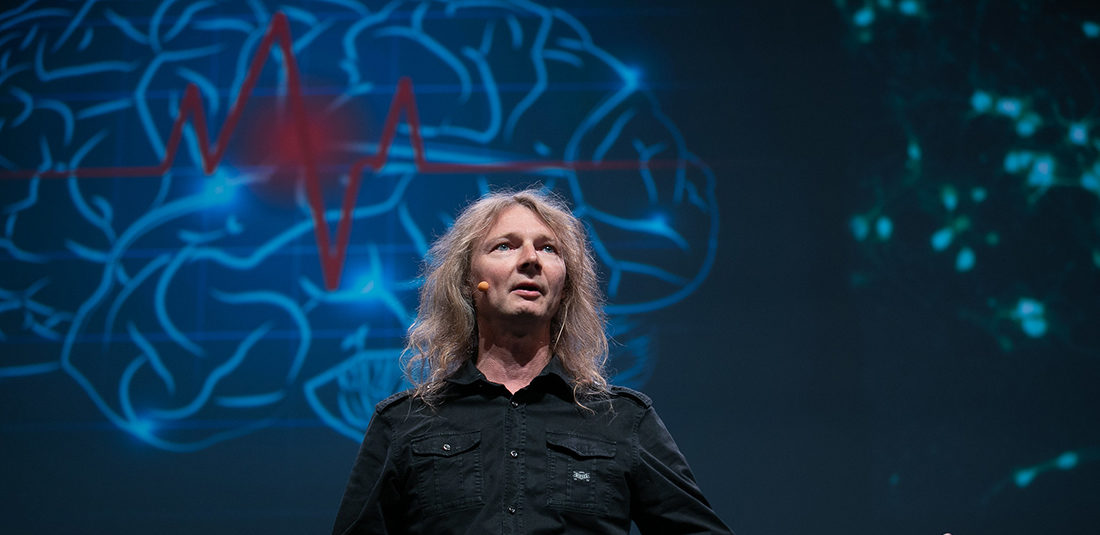

We are approaching the limit of how much further microprocessors can be developed. Gunnar Tufte proposes building computers in a completely new way, inspired by the human brain and nanotechnology.

Gunnar Tufte is a professor of computer technology, but his research has taken him in some surprising directions. He now heads a project that is rethinking how tomorrow's computers should be built, drawing inspiration from neuroscience and physics.

Tufte calls computers a miracle of the modern world but thinks their transistors are approaching retirement age.

“It’s time to rethink computers. In principle, they’re still being built the same way they were 60-70 years ago,” says Tufte.

Tufte believes that the structure of the human brain can inspire the architecture for the computers of the future: self-organizing and built from non-traditional materials.

He’s not talking about a cyborg, which is a mix of technology and biology.

For over 50 years, the number of transistors on a microprocessor has doubled roughly every two years. Tufte believes it won't be possible to keep up that aggressive pace much longer. Shrinking components further makes machines unreliable, while adding more parts makes them energy intensive. A typical data centre consumes as much power as 40,000 households, and the machines' growing complexity is making them too expensive to manufacture.

Look to the brain

The NTNU professor believes the brain has characteristics that computers should have.

“The brain provides stable performance even though the parts are unstable, it requires very little energy and has a self-organizing design process. If we manage to transfer properties like these from neural networks to computers, we’ll be able to revolutionize the way we make computers,” he said.

Tufte explains that the brain performs many of the same tasks as a computer does: it processes information, exercises control and has memory. But its structure is completely different. Brain cells are self-organizing: they build their own architecture and adapt constantly, without any overall plan, he said.

“A cell is both constructed and constructor. Neural networks are complex but start out simple. The organism adapts to its environment and the world. When we construct machines it's the opposite,” he said. “We build a computer from parts that are precisely planned and produced, and they're assembled according to a large plan to do a specific task. The machine is complicated from the start, but it can't develop.”

The art of learning

Whereas a computer has to be programmed to perform new tasks or adapt to other technologies, the brain can learn.

He says building this type of computer will require completely different hardware from that used in today's machines. The idea is being pursued in SOCRATES, a five-year research project partially funded by the Research Council of Norway that ends in 2022.

Nanomagnets are one possible approach.

“Magnets are easy to make, and they’re easy to scale because they’re so simple and require little energy. By enabling self-organization, we aren’t dependent on the individual component. One or more components can differ without the result being incorrect,” he says.

Nanomagnets already here

Nanomagnets have been produced in the NTNU Nanolab, and Tufte and his group are running simulations of how magnets can behave in a self-organizing fashion.

The researchers are collaborating with colleagues at ETH in Switzerland, the University of Sheffield, the University of Ghent, Oslo Metropolitan University and the University of York. Interest in financing research on alternatives to the silicon processor has taken off in the last five to six years, Tufte says. Internationally, current research includes using carbon nanotubes and various molecular solutions.

Ignores small errors

But how can results be correct if the hardware is allowed to fail?

“Errors occur when you scale down. So you have to compensate with technology that will detect errors. At some point, you’ll end up using more resources on discovering errors than solving the problem. The brain has an underlying self-organization that doesn’t depend on whether a single brain cell is reliable. We have to try to copy that,” Tufte says.

Tufte says the world would surely survive even if computers never become more powerful than today's, but that developing more efficient computers would also have clear environmental implications.

“We would avoid imploding the planet. But the impact on economics and politics would be huge. Everything is based on growth. Personally, I would see it as a huge advantage if growth stopped. We have to reduce consumption,” says Tufte.