Teaching robots to see and feel

Newly developed technology has given robots the ability to learn new skills, enabling them to perform complex tasks and work alongside humans. This innovation can benefit many crucial societal functions, such as food production.

More and more industrial tasks are being performed by robots, but human operators are still needed for the more complex manipulation actions, such as handling and processing food products.

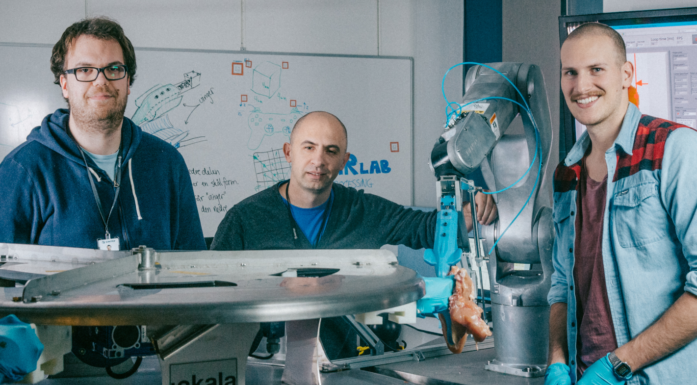

“If our aim is to automate some or all of these tasks in the food industry, or in other areas, we have to equip the robots with new knowledge via learning. They have to learn the so-called soft skills first so that they will be able to execute operations at the same level as humans in the future,” explained Ekrem Misimi, a SINTEF researcher who is developing robot learning technology as part of the iProcess project.

In order to teach the robots these complex manipulation skills, a combination of visual and tactile learning is required. In other words, they must learn to see and feel simultaneously.

Ekrem Misimi in the lab, where the robot is about to grasp a cherry tomato that it has never seen before. Image: TYD

Robot learning may also be useful on a larger scale, particularly now during the pandemic, when many people must work from home or cannot work on site at their plants because of the risk of infection:

“For society, the production, harvesting, handling and preparation of food products are crucial functions. Our technology aims to ensure a fully automated production line, based on intelligent robots. Essentially, intelligent robot technology can better prepare us as a society to cope with the bad times, and streamline production and value creation in good times,” said Misimi.

The possibilities are endless

The interaction between a robot and objects that are soft, fragile, pliant or malleable is one of the greatest challenges in robotics today, as these types of objects can easily change their shape and form when handled. It is easy for human operators to compensate for these changes in real time, but robots require advanced visual and tactile sensors in order to do the same.

Therefore, the robot is given artificial “eyes” in the form of 3D vision, an artificial “brain” from artificial intelligence, and sensitive “hands” that rely on force and tactile sensing.

“These qualities enable the robots to develop a task-specific intelligence that is good enough for them to do the job automatically,” explained Misimi.

Learning complex tasks using simple examples

Despite its capacity for learning, a robot is ultimately a machine. Therefore, it must first gain knowledge about tasks that it is to complete through sensing and learning, either in interaction with humans or by itself.

“Our aim is to get the robot to learn how to perform real-world complex manipulation tasks from simple examples,” said Misimi.

Therefore, the iProcess project has developed two robot learning methods. The first is “learning from demonstration” (LfD), wherein the robot learns to grasp soft food items through a combination of visual and tactile sensing. The second is “learning from self-exploration”, wherein the robot uses artificial intelligence to learn the task on its own in a simulated environment before finally being deployed to the real world, without any additional fine-tuning. The project has generated many interesting assignments for graduate students from NTNU studying artificial intelligence and robotics.

“A typical challenge in robot learning is that the human operator, or rather the teacher, demonstrates the task incorrectly to the robot. Therefore, we have developed a learning strategy that is based solely on the best demonstrations and automatically disregards the poor ones that are inconsistent with the teacher’s intended policy. The learning strategy uses 3D imaging for the correct positioning of the robot gripper and tactile sensing for the gentle handling and grasping of the objects,” explained Misimi.

“What is particularly interesting about learning from self-exploration is that the robot has never seen a fillet of salmon before, either in a simulated or real environment. But it still manages perfectly well to generalise in the real world in order to handle the new, unknown objects,” he added.

When the robot learns in this way, the learning time is shortened considerably, and the robot can be used to handle multiple food products, or similar objects, without any additional programming.
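The idea of learning only from the best demonstrations can be illustrated with a small sketch. This is not the iProcess method, whose details are not given here. It assumes that each demonstration has been summarised as a short vector of numbers (a hypothetical gripper position and grip force), and it swaps in a simple median-based outlier rule to discard demonstrations that disagree with the majority. The function names, the threshold `k` and the data are all assumptions made for illustration.

```python
from math import dist
from statistics import median


def filter_demonstrations(demos, k=3.0):
    """Keep the demonstrations that lie close to the median demonstration.

    Illustrative outlier rule only (an assumption, not the published method).
    """
    # The per-dimension median is a robust guess at what the teacher intended.
    centre = [median(column) for column in zip(*demos)]
    distances = [dist(demo, centre) for demo in demos]
    typical = median(distances)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    spread = median([abs(d - typical) for d in distances])
    threshold = typical + k * spread
    return [demo for demo, d in zip(demos, distances) if d <= threshold]


def mean_demonstration(demos):
    """Average the remaining demonstrations into a single target."""
    return [sum(column) / len(column) for column in zip(*demos)]


# Hypothetical data: (x, y, z) gripper position in metres, grip force in newtons.
# A real system would scale the features first so that no unit dominates.
demos = [
    (0.40, 0.10, 0.05, 1.0),
    (0.41, 0.11, 0.05, 1.1),
    (0.39, 0.10, 0.06, 0.9),
    (0.40, 0.09, 0.05, 1.0),
    (0.55, 0.30, 0.05, 4.0),  # poor demonstration: wrong place, squeezes too hard
]

kept = filter_demonstrations(demos)
target = mean_demonstration(kept)
print(len(kept), "of", len(demos), "demonstrations kept")
```

In this toy data set the last demonstration lies far from the others and is dropped, so the averaged target is built only from the four consistent ones.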

The research on LfD was published in “Robotic Handling of Compliant Food Objects by Robust Learning from Demonstration”, which was presented at the International Conference on Intelligent Robots and Systems, while the article on learning by self-exploration has been accepted for the upcoming International Conference on Robotics and Automation (ICRA) 2020.

Teaching an old robot new tricks

They say you can’t teach an old dog new tricks – but robots can be trained for many different handling tasks, from holding stationary objects to performing more complex tasks that require greater dexterity, such as manipulating objects while they are moving.

“The task could be anything that involves cutting or grasping objects that need to be handled gently. Whether it’s a fish fillet or lettuce, the robot must be delicate enough to not damage the products, but still get the job done,” said Misimi.

Important for the food industry

The new technology will be important both for the Norwegian food industry and for any other industry that would benefit from the robotic handling of pliant and malleable objects and that depends entirely on automation to keep its value creation in Norway.

“This project is a milestone in realising this vision. Robot technology will be able to increase both competitiveness and profitability and will enable a greater proportion of raw food material to be processed in Norway. This can contribute to increasing the products’ quality and reducing food waste. Moreover, this will benefit the environment, as raw materials will not have to be transported abroad for refinement, as often must be done today,” said Misimi.